[Blog] Building Intelligent, Context-Aware HMI at the Far Edge

Posted 09/18/2025 by Hussein Osman, Segment Marketing Director, Lattice Semiconductor; Ricardo Shiroma, Director of Business Development, Lattice Semiconductor

Human-machine interfaces (HMIs) are rapidly evolving, driven by trends such as automotive personalization, sustainable always-on interfaces, hygienic touchless user interfaces (UIs), consistent user experience (UX) across platforms, voice activation, and industrial automation for labor and safety needs. Whatever the driver or use case, modern HMIs must be smarter and more dynamic – shifting from command-based to context-aware systems that bridge the human-machine gap.

Enabling these solutions at the far edge – the most decentralized computing tier and the closest to data generation – involves using artificial intelligence (AI), which can make HMIs more responsive and self-sufficient. Processing data locally at the edge brings many benefits, including low latency, increased privacy, and power efficiency. More critically, the AI models running on these devices must meet high performance standards: if they produce inaccurate results, they risk losing credibility with users. Ensuring consistently accurate outputs is essential to the success of edge AI applications.

In a recent webinar from Arrow Electronics, the Lattice team explored the challenge of building AI-enabled HMI systems at the edge and demonstrated how a hardware-software co-design process can help make edge enablement much more efficient.

Key Considerations for Designing at the Edge

When creating AI-enabled HMI solutions at the edge, developers should focus on three core aspects:

- Hardware constraints – Determine what AI models are feasible based on available memory, computing power, and sensor capabilities. These limits affect model size, power use, and interoperability.

- Efficiency requirements – Consider how design choices impact power consumption and latency to ensure smooth, responsive edge processing.

- Use case context – Account for the operating environment, user behavior, and interaction patterns of the given application. For example, a display should adjust its brightness to ambient light and respond to user presence.
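The screen example in the last bullet can be made concrete with a small sketch. The Python below is only an illustration under stated assumptions: the `Context` fields, the lux threshold, and the linear mapping are hypothetical choices, not part of any Lattice product or API.

```python
from dataclasses import dataclass


@dataclass
class Context:
    ambient_lux: float   # ambient light sensor reading (hypothetical input)
    user_present: bool   # result of a presence detector (hypothetical input)


def backlight_level(ctx: Context, min_level: float = 0.05,
                    max_level: float = 1.0,
                    full_bright_lux: float = 10_000.0) -> float:
    """Return a backlight level in [0, 1] based on light and presence."""
    if not ctx.user_present:
        # Nobody is in front of the display: switch it off to save power.
        return 0.0
    # Scale linearly with ambient light, clamped to the range [0, 1].
    fraction = min(max(ctx.ambient_lux / full_bright_lux, 0.0), 1.0)
    return min_level + fraction * (max_level - min_level)
```

A command-based interface would wait for the user to change the brightness; here the two context signals drive the decision directly, which is the shift the bullet describes.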

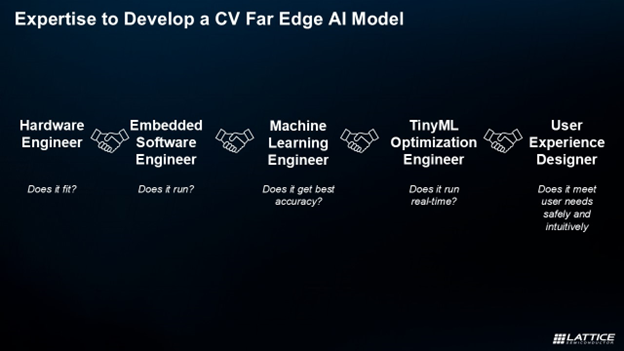

Early alignment across these dimensions helps avoid system-level tradeoffs and bottlenecks. Just as critical is interdisciplinary collaboration – hardware engineers, embedded software developers, ML specialists, TinyML experts, and UX designers must work together throughout the design process.

For example, a hardware choice that shrinks a design’s form factor may limit which AI models can be implemented downstream. Design teams must communicate consistently and understand each other’s core requirements to build truly optimized edge solutions.
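One way teams might surface such a constraint early is a back-of-the-envelope memory check. The sketch below is a simplification with hypothetical numbers: it counts only weights and a fixed activation buffer, and ignores code size, frame buffers, and anything device-specific.

```python
def model_fits(num_params: int, bits_per_weight: int,
               activation_bytes: int, memory_budget_bytes: int) -> bool:
    """Rough check: do weights plus activations fit in the memory budget?"""
    # Round the weight storage up to whole bytes.
    weight_bytes = (num_params * bits_per_weight + 7) // 8
    return weight_bytes + activation_bytes <= memory_budget_bytes


# Hypothetical example: a 250,000-parameter model on a 512 KiB budget
# with a 64 KiB activation buffer.
BUDGET = 512 * 1024
ACTIVATIONS = 64 * 1024
fits_int8 = model_fits(250_000, 8, ACTIVATIONS, BUDGET)    # True
fits_fp32 = model_fits(250_000, 32, ACTIVATIONS, BUDGET)   # False
```

In this made-up case the same network fits once quantized to 8-bit weights but not at 32-bit precision, which is the kind of downstream effect a hardware decision can have on model choice.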

Building AI-driven Image Processing at the Far Edge

To better illustrate edge AI implementations, consider a common use case: image processing. Many edge HMI applications – including automotive driver monitoring systems (DMS) and autonomous vehicles, smart home security systems, and industrial operator safety compliance – require the integration of smart cameras and image processing capabilities.

In smart factories in particular, these HMI systems perform a variety of functions. They can detect an operator entering the factory floor, use face identification to unlock the interface, check that the operator is wearing the right protective gear, and use gaze monitoring and object detection to confirm that the operator, materials, and machinery are behaving as expected. When all of these steps are handled by far edge devices, organizations can reduce the need to route sensor data to and from a centralized cloud, saving both time and resources.

Supporting image processing at the edge – such as face ID, gesture recognition, and gaze tracking – requires careful consideration of hardware limits, power efficiency, and environmental context. These factors shape how preprocessing and inference pipelines are built to meet real-time demands in smart factory settings.
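The factory-floor sequence described above can be pictured as a staged pipeline in which each check gates the next. The Python sketch below is purely illustrative: the stage names, the dictionary standing in for a camera frame, and the early-exit policy are all assumptions, and real stages would run image preprocessing and neural network inference instead of dictionary lookups.

```python
from typing import Callable, Dict, List, Tuple

Frame = Dict[str, bool]  # stand-in for a camera frame (hypothetical)
Stage = Tuple[str, Callable[[Frame], bool]]


def run_pipeline(frame: Frame, stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first one that fails."""
    passed: List[str] = []
    for name, check in stages:
        if not check(frame):
            break  # later, costlier stages never run for this frame
        passed.append(name)
    return passed


# Hypothetical stages mirroring the steps in the text.
STAGES: List[Stage] = [
    ("presence", lambda f: f.get("person", False)),
    ("face_id", lambda f: f.get("known_face", False)),
    ("ppe_check", lambda f: f.get("wearing_ppe", False)),
]

result = run_pipeline(
    {"person": True, "known_face": True, "wearing_ppe": False}, STAGES
)
```

Ordering the stages so that cheap checks come first is one way to respect the power and latency limits mentioned above, since most frames on an empty factory floor would exit at the presence stage.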

Enabling Edge AI Deployments with Lattice Solutions

Ultimately, dynamic edge HMI applications require context-aware, AI-driven image processing capabilities to function as intended. Supporting these solutions requires design teams to surmount technological capacity and power efficiency hurdles, all without placing excess strain on far edge devices.

The Lattice sensAI™ solution stack and Lattice’s portfolio of low-power, high-performance Field Programmable Gate Arrays (FPGAs) help today’s developers build and support solutions like edge image processing for smart factories. These Lattice solutions help developers address core constraints by:

- Offering unparalleled flexibility. Lattice FPGAs can be reconfigured to support basic on-device image signal processing (ISP) functions and neural network needs on edge devices with limited compute and RAM capacity. This helps offload compute-intensive tasks without adding excess bulk to the overall device design.

- Reducing power consumption. While edge AI workloads require additional power to operate, Lattice FPGAs are designed for low latency, deterministic timing, and low power usage. This helps developers support always-on HMI workloads without drawing excessive amounts of power from edge devices.

- Streamlining edge design. The sensAI solution stack includes FPGA hardware, software tools, IP cores, pre-trained models, and developer resources for use cases including face detection, gesture recognition, and voice analysis. This supports the fast development, deployment, simulation, and optimization of edge HMI use cases.
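To see why power draw matters so much for always-on workloads, a simple duty-cycle estimate can help. The sketch below uses a standard weighted-average formula; the milliwatt figures in the example are hypothetical placeholders, not measurements of any Lattice device.

```python
def average_power_mw(active_mw: float, idle_mw: float,
                     duty_cycle: float) -> float:
    """Average power for a device that is active a fraction of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_mw + (1.0 - duty_cycle) * idle_mw


# Hypothetical example: inference runs 10% of the time at 50 mW,
# and the device idles at 1 mW otherwise.
estimate = average_power_mw(active_mw=50.0, idle_mw=1.0, duty_cycle=0.1)
```

Because an always-on interface spends most of its time waiting, the idle term often dominates the battery budget, so both the idle draw and the cost of each wake-up are worth estimating early in the design.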

Supporting Intelligent Edge HMI Solutions

Designing for the far edge is about more than model optimization; it's about smart, context-aware system design and development that preserves performance while meeting real-world constraints. Whether designing for smart factories or other modern applications, developers can use Lattice solutions to get the software and hardware needed for low-latency, low-power, real-time AI deployment in edge HMIs.

To learn more about designing the models and hardware required for AI-driven edge HMI solutions, watch the full webinar today. To dig deeper into how Lattice’s edge AI FPGA solutions can help you build smarter HMIs at the edge, contact us today.